The 4 Rare Leadership Skills We Need More Than Ever In The AI Era

A new essay from Shane Snow

During the early months of the pandemic, I wrote a Forbes column that went viral. The subject was four rare leadership skills that I believed the world would need to get through the big changes that COVID was wreaking on us.

Today, I think we’re on the precipice of an even more massive disruption than COVID. Less violent, for sure. Less sudden, perhaps. But more disruptive.

I’m talking about AI, of course, which, as Stanford put it this year in its annual AI Index Report, “business is all in on.” The trepidation and anxiety that prevailed a couple of years ago have turned into an all-out race to build and implement AI faster than the other guy.

This is happening at the micro level (one report claims that 57% of workers are taking classes on their own time to get ahead of their colleagues), at the macro level (think tanks with politicians’ ears are flinging out reports about how the US needs to beat China at AI), and at every leadership level in between.

As you can tell from the links so far in this post, I’ve been reading a lot of large-scale survey reports and studies from non- and for-profit companies about “leadership” and “AI.” (About twenty so far this year and counting.)

I have two main conclusions from all these reports:

CONCLUSION 1: The Wave Is Coming Whether We Like It Or Not

Fewer leaders are asking “should we?” and more are asking “how do we?” when it comes to the AI race.

That means that you and I are living in a world that will continue to race toward AI development and adoption. Depending on your level of optimism, the ship is sailing—or the tidal wave is coming.

This does not mean that we should give up on using our brains and take our hands off the steering wheel. It does not mean that we should not use our influence to protect against the risks, or to think ahead.

It means that we need to operate with the assumption that the powers that be are not going to stop AI from growing. So we need to make smart decisions within that new reality.

CONCLUSION 2: Long-Term Leadership Requires Four Essential Soft Skills

As AI gets better and better at “hard skills” like math, programming, generating, and executing commands, the need for human leaders with strong “soft skills” is growing.

In fact, the same four leadership soft skills that were necessary to guide us through the pandemic will be just as essential for tomorrow’s leaders as we navigate the tidal wave of change and uncertainty that the coming years of the AI Era will bring.

I’d like to recap those four skills with that looming tidal wave in mind:

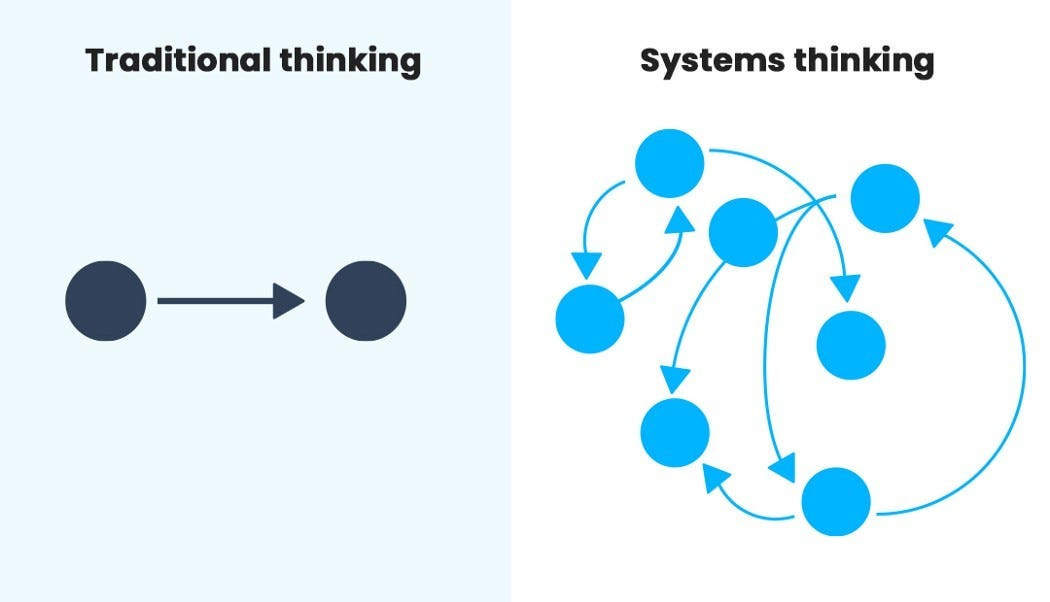

AI-Era Leaders Need To Be Systems Thinkers

Right now, AI growth and adoption are being fueled by competition: the race to get ahead, or to avoid being left behind. If history is a guide, there will be a transition period (I believe we’re in it) when things are especially uncertain and crazy. Eventually we’ll settle into a “new normal” where, despite continuing rapid change, we’ll be used to the paradigm of an AI-powered world, rather than a mobile-internet-powered world that AI is starting to turn upside down.

To survive until then—and to thrive once we’re there—we’re going to need leaders who don’t make hasty decisions that screw us over later.

In other words, we’re going to need more “Systems Thinking.”

As I wrote previously:

Systems are combinations of things that *should* exceed the sum of their parts. A rainforest is more than its flora and fauna; the interconnection between those helps all the rainforest’s life thrive more than it would alone.

Same with human systems.

The actions of one city, or business, or manager almost always have second-order effects on the whole system. Tomorrow’s great leaders will be aware of that and make decisions in terms of the greater system, not just their own immediate priorities.

Tomorrow’s best leaders will understand that systems thinking applies to their own people as well. The team you lead must be smarter than you.

The leader’s job is to unlock the potential of the larger system (the team), not to be the big shot who comes up with all the ideas on his/her/their own. This means that tomorrow’s leaders will need to understand how cognitive diversity works, and use it.

You’ll be hearing more from me on Systems Thinking in the coming weeks as part of my Thinking Ahead series from my new keynote and upcoming book.

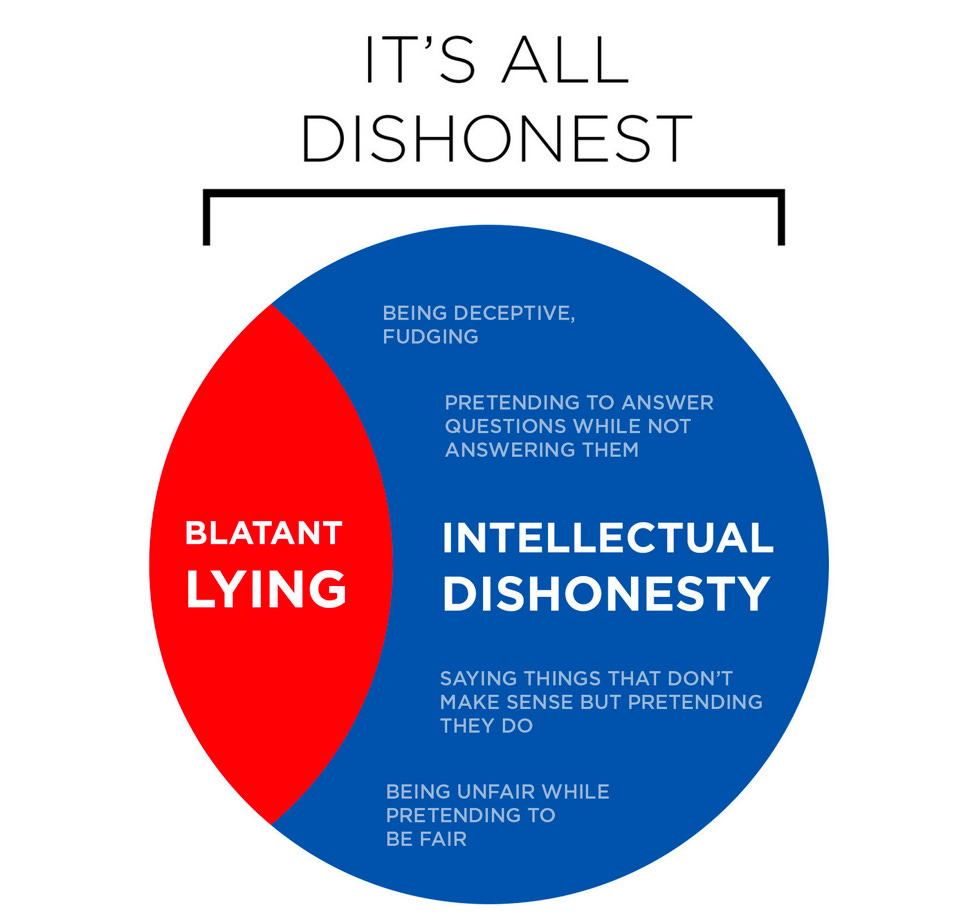

AI-Era Leaders Need To Be Intellectually Honest

In digging into all of these AI studies lately, I’ve had to stop and take a deep breath a few times.

One of those times was when I realized that for the third time I’d been bait-and-switched by a company advertising an “AI Leadership Report” that turned out to be a brochure for their company.

I believe this happens because when you search the web for something like “AI Leadership Report,” you get sponsored results that, through the magic of advertising, automatically insert your search term into the headline. So you end up on a landing page for a construction company asking for your email in exchange for an “AI Leadership Report,” because the landing page automatically inserts your search term as well.

These are companies I will never do business with, now that I’ve been tricked.

And yet, these kinds of things are features of today’s business ecosystem. It’s become normal to overhype, to sell a product before it exists, and to “fake it till you make it.” In fact, this is a core flaw in how today’s AI treats us.

As I wrote before:

There’s a difference between teeeeechnically not telling a total lie… and being truly honest. Unfortunately, for too long the world has rewarded those who are able to take advantage of others through information asymmetry or intellectual dishonesty.

If you often have to wriggle out of the things you say—instead of being clear, and admitting when you’re wrong—you can’t be counted on in the way the world needs now.

In a world as complex and connected as ours, the collaboration we need in order to make our systems work (see above) requires more transparency and more honesty. So tomorrow’s great leaders will need to take integrity to a new level.

The leaders we need tomorrow are the ones we’ll trust because we’ll see that they not only refuse to trick us, but also come back and tell us when they realize they were wrong. Because in a world that’s changing as fast as this one, no one is going to be right all the time.

Which leads us to…

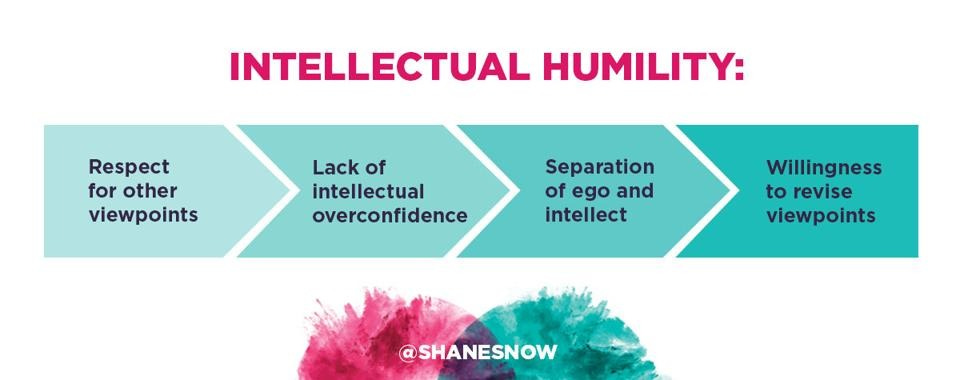

AI-Era Leaders Need To Be Intellectually Humble

As I wrote in my original column:

Helping a team of people to unlock its potential to be smarter than you requires humility—for sure. But I think that tomorrow’s leaders need to have something more specific than that. They need intellectual humility.

That is, they need to be aware of the limits of their own knowledge, and to be willing to change their minds in light of new information—no matter how hard it is or how bad it looks.

Unless you plan on only handling the challenges that have already been handled in the past, it’s a huge mistake to pick leaders who are stoic and immovable. We need leaders who are adaptable.

When it comes to leadership, flexibility is the new strong.

If AI is indeed going to keep getting smarter, then:

More tools will keep emerging

More jobs will keep changing

More businesses will need to change strategies

More decisions will have more ripple effects (see “Systems Thinking” above!)

The leaders that will get us through all that will need to be adaptable—and they’ll need to help us be adaptable too.

AI-Era Leaders Need To Be Empathetic & Charitable

I’m going to make a prediction about the future that you can 100% hold me to: We will all make mistakes. We will all make decisions we think are the right ones and realize later were wrong, once we have more information.

We are going to need leaders who treat other humans as if they have the best intentions when things go wrong. We will need to foster a culture that prioritizes and values human dignity.

As I wrote previously:

We live in a world where it’s increasingly impossible to avoid people who aren’t just like us. And that’s a good thing; innovation is built from different ways of thinking and culture-add rather than culture fit.

But whereas in the past we could get away with having leaders who just looked out for themselves or “their people,” tomorrow we’re going to need leaders who are able to put themselves in the shoes of everyone. Among all the leadership traits that people are clamoring for right now with what’s going on today, empathy ranks at the top.

But I think that understanding where people are coming from isn’t going to be enough. Our leaders are going to need to model kindness and nonviolence in a way that goes above and beyond what many people would call “fair enough.”

We will rarely, if ever, be in a situation again where some amount of past baggage, past injustice, past trauma, or present immaturity isn’t at play in the big decisions and problems we tackle. So we’re going to need leaders who are models of treating people charitably.

We need the leader who, as Dr. Martin Luther King once said, “not only refuses to shoot his opponent but he also refuses to hate him.”

This is the kind of leadership we need to reestablish trust. It’s the kind of leadership that will not just solve new problems, but be able to look at our existing systems that create problems—including inequality and xenophobia and outdated thinking—and re-invent those systems.

Leaders who do this will hang on to their teams, and be able to lead them through hard things.

I’d like to end this essay the same way I ended my original column:

Imagine for a minute that every leader of every company and country was a systems thinker who was intellectually honest and humble, and who practiced empathy and modeled charity.

Can you imagine how much we’d get done together?

Can you imagine how we’d handle the next global outbreak?

The next big tragedy?

Now, some may say that the kind of aspirational leadership I’m describing is a pipe dream.

But then again, it was Eleanor Roosevelt who said, “The future belongs to those who believe in the beauty of their dreams.”

Pass this along if you agree.

–Shane


Great post, Shane. I wonder how you square the importance of intellectual honesty with the incentive system of the attention economy. Sam Altman wins by continually saying outlandish BS in the press, vacillating between straight-up lies about AI's development timetable and predictions of AI's existential threat. The market rewards him; he controls the narrative, earns media and attention, and OpenAI's market share and valuation go up and up. A startup like Cluely gets $15M in funding for an AI that'll help you cheat ... that isn't even real. We need intellectually honest leaders ... but doesn't the game also reward intellectual dishonesty?

All of this is true, Shane, thank you. I personally think that for the time being AI should be totally banned for political use and carefully but strictly limited for personal use. That being said, it has its upsides. Anyway it's been (is being) imposed on the translation industry and in my experience is more an annoyance than a real help other than as a writing tool that saves me from further carpal tunnel - unless I have the option to train it myself on the platforms and in the tools I use, and it then *stays* trained and doesn't try to override over 40 years of experience in my linguistic and industry fields. If it does, then I send it sarcastic notes and put everything back the way it should be, but that's time I'm not paid for. That's my personal beef with it. "Hal" is not what I want; but I'm all for a tool that helps with typing, consistency and handling regular client glossaries. And if AI can advance climate, medical and scientific research without closing the outside bay door I'm good with that, too. But we the users need to be AI's masters, not a small group of shadowy whoevers. Take care.