THINKING AHEAD: The Human Skills That Will Help Us Adapt To AI (And Not Die)

A new book and keynote speech from Shane Snow

What do insurance brokers, genetic disease researchers, sitcom marketers—and the 811 number you’re supposed to call when you’re about to dig a hole in the street and don’t want to hit a gas pipe—all have in common?

The answer has to do with the Tang Dynasty in ancient China.

In the 9th century, Chinese alchemists who were trying to make gold discovered something unexpected: If you mix sulphur, charcoal, and saltpeter... it explodes.

Put this mixture on an arrow, and you can set something on fire from very far away.

Thus was invented gunpowder. Soon after, all sorts of weapons—rockets, cannons, something called a “flying incendiary club for subjugating demons”—made their way onto the battlefield in China. And, with the help of Genghis Khan, into the Middle East and Europe.

The thing about gunpowder is it didn’t just give armies new weapons to fight with. It completely disrupted the social and economic order of Europe.

It wasn’t just a “better weapon.” It was a platform from which myriad deadly (and some genuinely useful, not-deadly) inventions could spring. And those inventions made the knight, the castle, and eventually the entire feudal system (of lords, serfs, and private armies) obsolete.

With gunpowder, any idiot could shoot straight through a highly trained knight’s armor. A peasant on foot could take down a world-class cavalry rider. With gunpowder, a cannon could blast through a castle wall. Gunpowder was democratizing and terrifying.

Believe it or not, that invention from the Tang Dynasty alchemists led to a restructuring of the entire world. Those with any power at all faced the choice of “adopt gunpowder and adapt to a new system” or “f*cking die.”

Lords who paid private knights to enforce their little fiefdoms got wiped out by poorly trained armies with guns. Lords who adapted in time consolidated power and became kings. To stay on top, they created systems of taxation that allowed them to maintain standing armies of infantry with guns.

Feudal society collapsed. The nation-state rose up from the rubble.

Ok, so what does this have to do with the 811 people you call when you need to dig a hole in the street?

Over the last two years, I’ve given keynote speeches across a crazy variety of industries: from insurance to genetic research to sitcom marketing, from 30 Rock to San Juan to Columbus. (Including the 811 hole-digging folks, who are awesome, btw!) This is not unusual for me. What’s unusual is that at every single event in that time, I have heard the leaders voice versions of the same three worries:

“AI is going to give our competitors an advantage if we don’t adopt it too.”

“We need to incorporate AI into our strategy, but things are changing so fast we don’t want to lean into the wrong things.”

“Will AI put our people out of a job?”

Never in my 20-year public speaking career have I heard the same consistent concerns from so many different types of audiences.

Leaders of companies right now are in the position of the feudal lords during the introduction of gunpowder. Adapt—or f*cking die.

And here’s the thing: If all these big companies are freaking out, the same thing is happening on a micro level, which means all of us are affected.

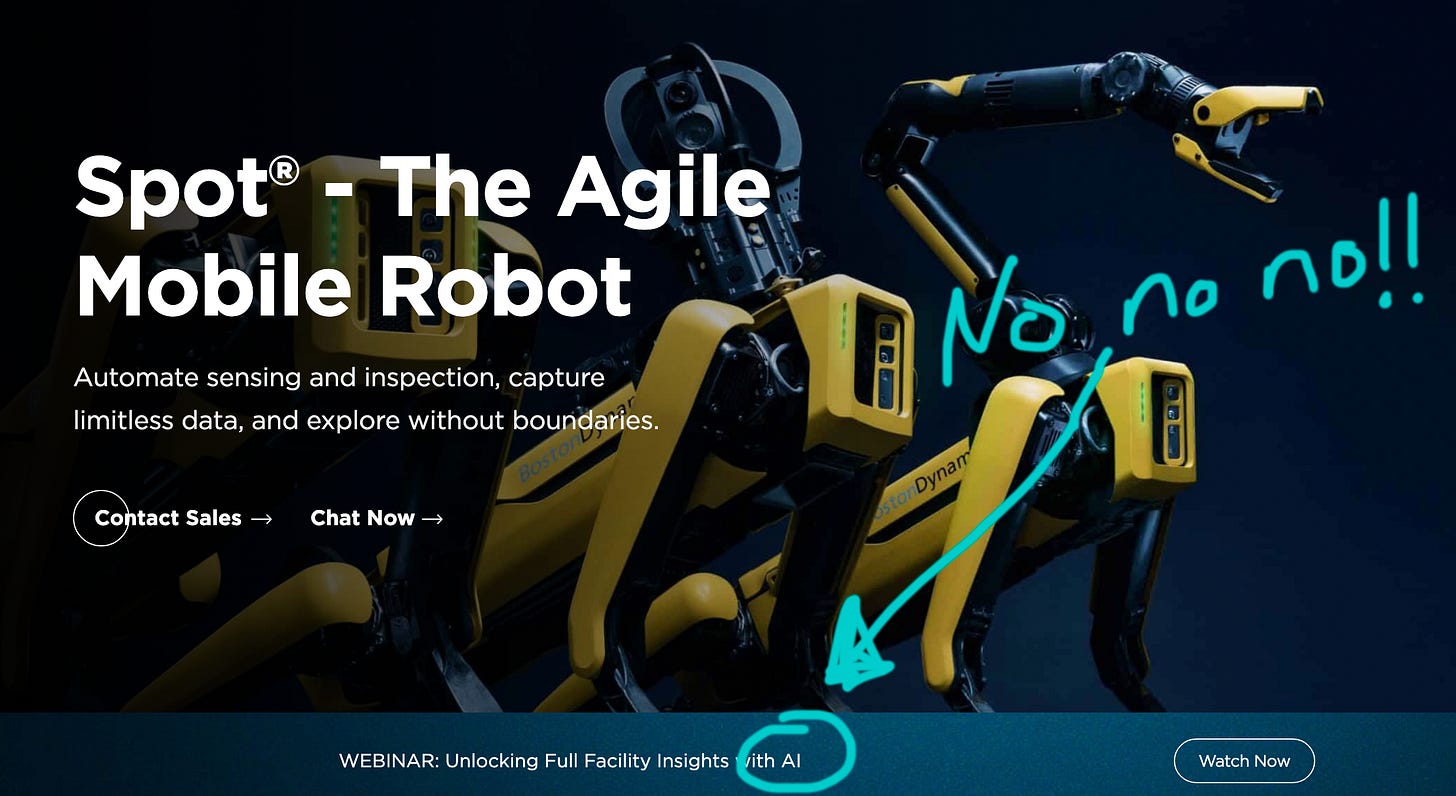

I don’t need to give you the stats. AI chatbots have a bazillion users. Entry-level computer programming jobs are being wiped out by AI tools. Animation jobs are next. The US economy is being propped up by massive investment in AI data centers. Those Boston Dynamics guys are even talking about “AI” on the page advertising those terrifying robot dogs.

Corporate leaders might be thinking of adapting to AI as necessary to maintain growth, but OpenAI is also putting gunpowder in the hands of every untrained peasant in the world.

What do we do with it?

What should we do with it?

The Power of “Thinking Ahead”

Here’s a question: If gunpowder was developed in China, why didn’t China take over the world?

The answer, though we don’t have perfect records of the time period, is likely that the Mongols and Europeans adopted gunpowder weapons so quickly that China couldn’t turn them into a lasting strategic advantage. Too slow. Now everybody has the same tech.

You already know where I’m going with this. Organizations can’t rely on being the first to adopt new AI technology as a strategy. Individuals can perhaps adopt fast enough to gain short-term advantages. But by the time that new AI workflow tool goes through your company’s compliance department… your competitors have it too.

What will give us an advantage is inherently human. Not technological.

Our advantage is going to be our ability to think differently than folks who are all using the same AI tools:

It’s going to be our skill at asking sharper questions.

Our ability to make smarter decisions that weigh human values.

Our ability to create optionality while we move quickly enough to compete.

To collaborate with others and combine their human differences into synergy.

To extract unique value from new technologies, not the same answers everyone else extracts.

If you follow this Substack, you’ll have noticed that I’ve been writing a lot about decision making lately. That’s because “good decision making” is one answer to a question I’ve been working on over the last year:

“What mental and social tools do we humans need to successfully navigate massive changes like the ones AI is bringing about?”

Being able to better think through decisions is one of those tools. (You might call it wisdom, or clear thinking, though there’s more to it.)

And fortunately, the work I’ve been doing over the past 14 years (on lateral thinking in Smartcuts; on neuroscience and human behavior in The Storytelling Edge, co-written with Joe Lazer; and on team dynamics and synergy in Dream Teams) all naturally leads to this topic.

Through all this work—building on the good work of many others before me—I’ve become convinced:

To navigate rapid change like what’s happening with AI, we need to lean into specific, uniquely human advantages.

But most of all, I’m sharing this with you today because I’m excited to announce that…

I’ve Launched A New Keynote Talk Out Of This Work (And A Book Is Coming!)

I’ve kicked off a speaking tour about how we humans and the companies we work for can use science, history, and human skills to navigate the next chapter of our work and lives. And though I am talking about different facets of this with different organizations, I call the stump speech THINKING AHEAD.

As a sneak preview, here are some of the main ideas I’m speaking about:

Getting Out Of The Box - making sharper observations, asking better questions so we can extract unique value out of our tools and teammates.

Staying Out Of The Corner - creating optionality, using hypothesis-based reasoning as we explore solutions to problems.

Widening The Perspective - seeing clearly, seeing the bigger system and its second-order effects so we can apply powerful tools in better ways.

Building Capacity For Adaptability - habits for weighing options, letting go, and making decisions that let you move quickly—especially when it’s hard.

And how to use human skills such as emotional intelligence, storytelling, and trust to get groups of high-potential people to do all this together!

(FYI, for you, my Substack readers, I’m going to be sharing a lot of the above in this newsletter over the next year in the lead-up to the new book.)

I’ve been floored by the responses to this stuff so far. It’s a culmination of so much of my work.

If you know someone whose organization might want to dig into THINKING AHEAD, please pass the link along.

Until then, my best wishes to you. Be well, and stay away from gunpowder!

Shane

P.S. A few FAQs:

Q: Who is the THINKING AHEAD keynote for?

Teams navigating decisions around AI; organizations undergoing AI transformation; and smart people who want to leverage new technology instead of getting eaten up or left behind by it. See more details on my speaking page.

Q: What if I just want to watch the talk myself, as one person?

In the next week or so, I’ll be posting a keynote I gave in the last year that inspired this new one. Some time ago, I was invited to give a speech in Phoenix on Human Decision Making in the Age of AI, and out of that talk I developed THINKING AHEAD. Stay tuned for the decision-making video shortly. And I’ll be sharing newsletters about the sub-topics within THINKING AHEAD over the coming months.

Q: When is the new book going to be ready?

Oh man, are you asking me this, too? Join my agent, my wife, and all my friends who are asking the same thing. 🙂 (It’s sometime in 2026, I think!)